Everything’s fine (nope): Spot, the not-at-all terrifying robot dog from Boston Dynamics, can now make decisions and express itself thanks to ChatGPT.

In a post published on its blog, the American robotics company described its interest in recent advances in the artificial intelligence models that power chatbots like ChatGPT.

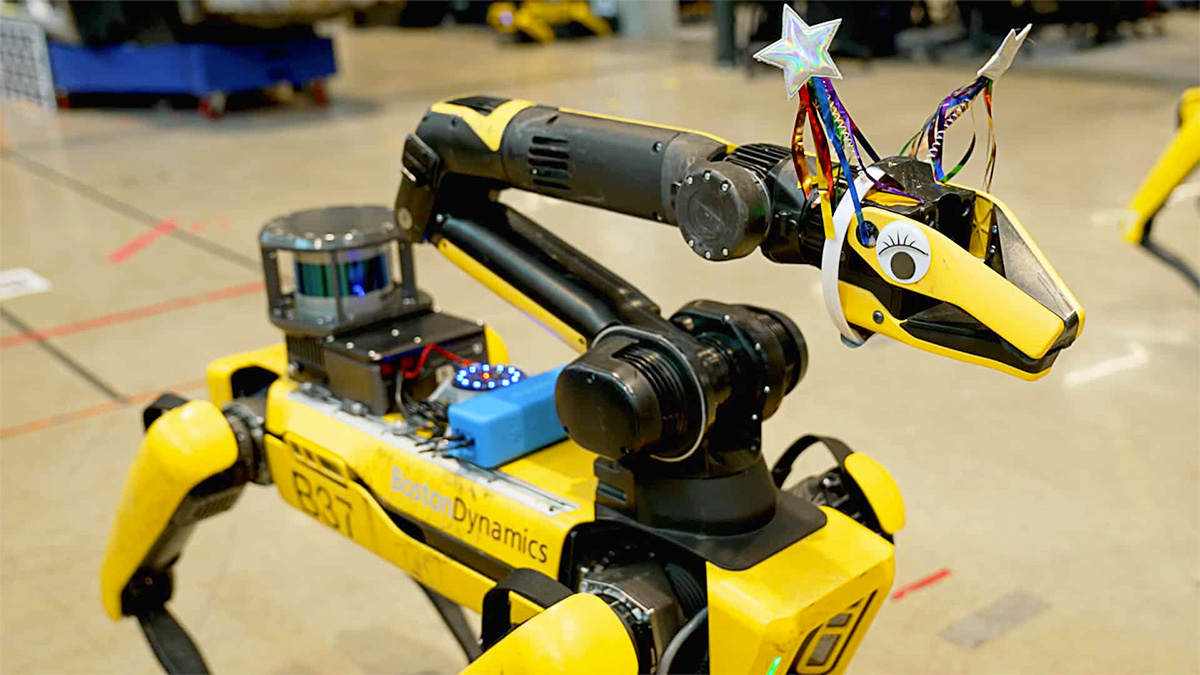

Nice doggy

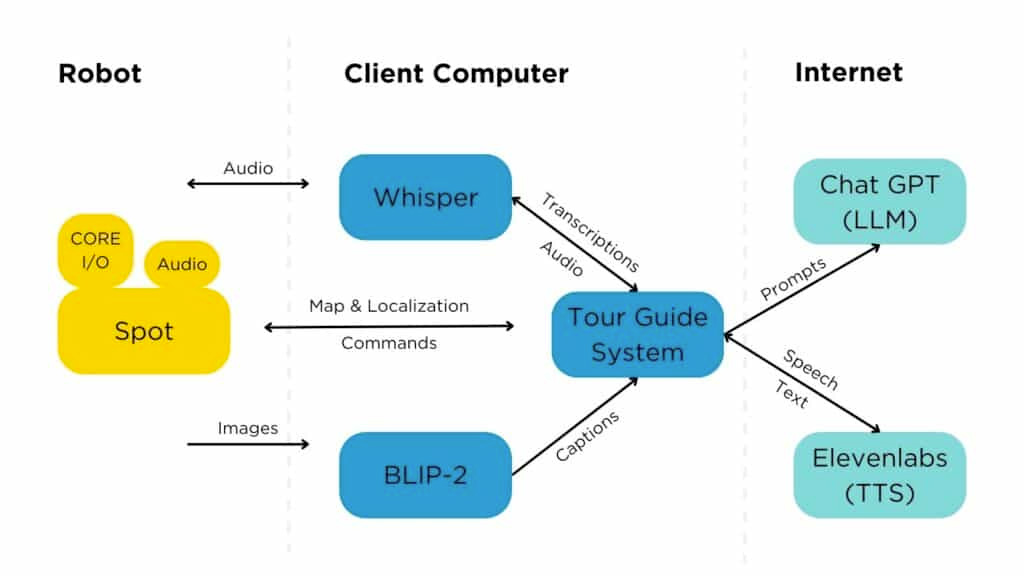

Boston Dynamics has developed a demo version of its famous robot dog, leveraging this kind of model to allow it to make real-time decisions.

The result is a “tour guide” robot capable of navigating an environment, observing objects, and relying on AI to describe them.

The robot can also answer questions from its audience and plan its actions.

To do this, Spot is equipped with a speaker and a microphone, and is connected to the APIs for ChatGPT and Whisper, OpenAI’s multilingual speech recognition model. Whisper transcribes spoken language into text, which is then processed by ChatGPT to generate an appropriate response.
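As a rough illustration of that transcribe-then-respond loop, here is a minimal Python sketch. It is not Boston Dynamics’ code: the `VoiceLoop` class, the system prompt, and the two stand-in functions are all hypothetical, and the real speech-to-text and chat calls are passed in as plain callables so the example runs without any network access.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]


@dataclass
class VoiceLoop:
    """Hypothetical glue between a speech-to-text step and a chat model."""
    transcribe: Callable[[bytes], str]        # plays the role of Whisper
    chat: Callable[[List[Message]], str]      # plays the role of ChatGPT
    system_prompt: str = "You are a tour guide robot."  # assumed, not the real prompt
    history: List[Message] = field(default_factory=list)

    def respond(self, audio: bytes) -> str:
        # 1. Turn the recorded audio into text.
        question = self.transcribe(audio)
        self.history.append({"role": "user", "content": question})
        # 2. Send the system prompt plus the conversation so far to the chat model.
        messages = [{"role": "system", "content": self.system_prompt}] + self.history
        reply = self.chat(messages)
        # 3. Remember the reply so follow-up questions keep their context.
        self.history.append({"role": "assistant", "content": reply})
        return reply


# Stand-ins for the real services, used only to make the sketch runnable.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_chat(messages: List[Message]) -> str:
    return f"You asked: {messages[-1]['content']}"


loop = VoiceLoop(transcribe=fake_transcribe, chat=fake_chat)
reply = loop.respond(b"What is this room?")
print(reply)  # prints "You asked: What is this room?"
```

In a real setup, the two callables would wrap requests to a speech recognition service and a chat model, and the returned text would still need a text-to-speech step before reaching the speaker.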

The Boston Dynamics teams also shared the prompts that set the conversational framework for the robot on their blog.

To enhance the illusion of conversation, Boston Dynamics animates the robot’s arm and gripper while it speaks. Small eyes and a few costumes were even added for the occasion.

The video below shows the robot dog in action:

Did you say creepy?

During their tests at Boston Dynamics’ premises, engineers admit to being surprised by some of the robot’s reactions.

When asked about Marc Raibert, the founder and former CEO of the robotics company, the robot said it didn’t know and suggested asking the IT support staff, which it promptly went to do on its own.

Even more surprising, when engineers asked it about its “parents”, the robot went to where older Boston Dynamics robots are displayed (Spot V1 and BigDog) and answered that these were its “elders”.

Everything’s fine, right?

Despite everything, the robot dog also exposed some shortcomings of ChatGPT. When asked about Boston Dynamics’ “Stretch” robot, designed for heavy lifting, the robot responded that it was meant for yoga, taking the name “Stretch” literally.

The robot can also be slow to answer: the delay sometimes reaches 6 seconds, which somewhat breaks the flow of the conversation.

Nevertheless, Boston Dynamics is enthusiastic about this test and already envisions use cases where robots could communicate about their tasks with the humans overseeing them.

Such robots could prove useful in sectors such as manufacturing and construction, and possibly in tourism or entertainment.

The story doesn’t mention whether the creators of Black Mirror have started brainstorming a new episode for their series.
