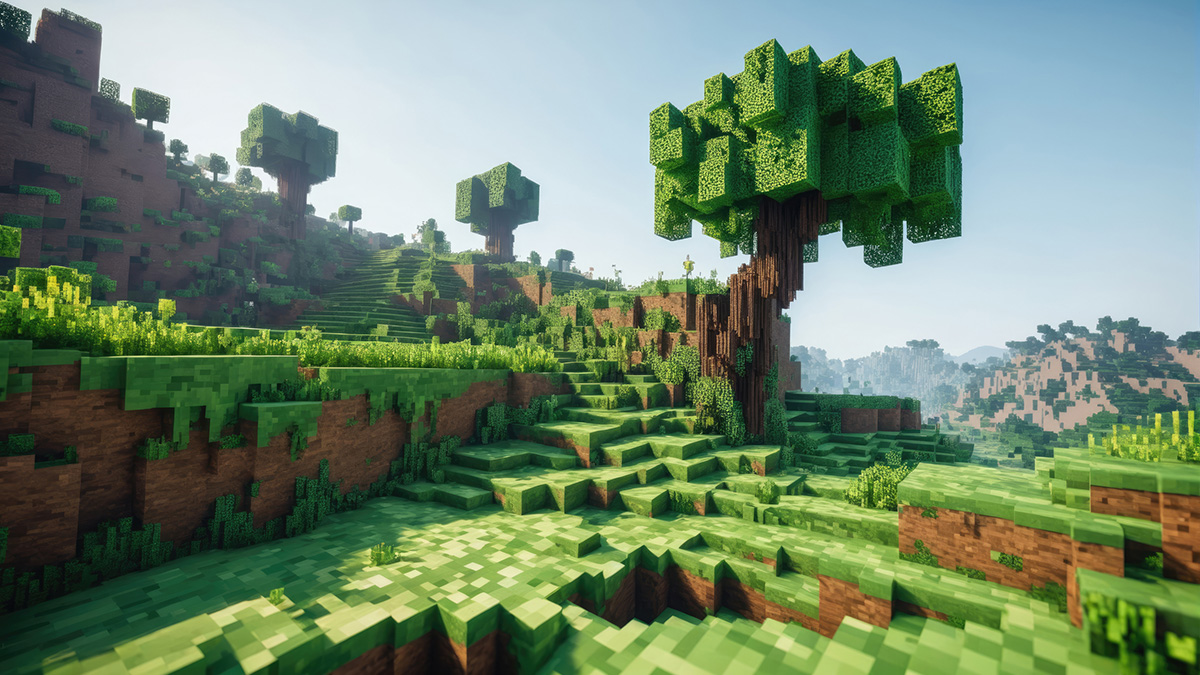

A group of developers had the clever idea of pitting various AI models against each other by having them build… Minecraft models! The result is much more fun and creative than traditional AI benchmarks.

The site MC-Bench (short for “Minecraft Benchmark”) invites visitors to evaluate today’s top AI models by comparing the creations they generate in Minecraft, the best-selling game in the world.

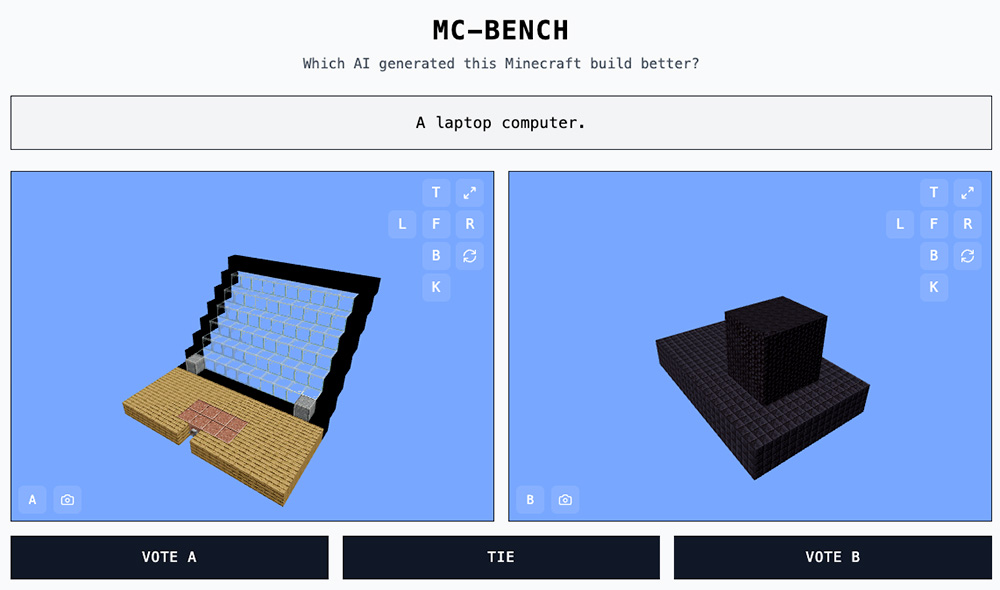

The concept is simple: the competing AIs are given a prompt (such as “a hot dog”, “a coffee mug”, or more elaborate scene descriptions) and must generate code to build the creation in Minecraft.

Users are then invited to vote for the best build without knowing which AI model created it. Only after voting do they discover which AI was behind each (possibly questionable 👀) blocky masterpiece.
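For the curious, the blind-vote flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the model names are placeholders, the helper functions are hypothetical, and ranking by a simple win rate is an assumption, since the article does not describe how MC-Bench actually computes its leaderboard.

```python
import random
from collections import defaultdict


def make_blind_pair(builds, rng):
    """Pick two different models and show their builds only as 'A' and 'B'."""
    # `builds` maps a model name to its build; names stay hidden from the voter.
    model_a, model_b = rng.sample(sorted(builds), 2)
    return {"A": model_a, "B": model_b}


def record_vote(tally, pair, choice):
    """Store the vote, then reveal which model was behind each build."""
    winner = pair[choice]
    loser = pair["B" if choice == "A" else "A"]
    tally[winner]["wins"] += 1
    tally[loser]["losses"] += 1
    return winner, loser  # the reveal happens only after the vote


def win_rates(tally):
    """Share of head-to-head votes each model has won (assumed ranking rule)."""
    return {
        model: t["wins"] / (t["wins"] + t["losses"])
        for model, t in tally.items()
        if t["wins"] + t["losses"] > 0
    }


if __name__ == "__main__":
    # Placeholder builds for the prompt "a hot dog".
    builds = {"model-x": "build 1", "model-y": "build 2", "model-z": "build 3"}
    tally = defaultdict(lambda: {"wins": 0, "losses": 0})
    pair = make_blind_pair(builds, random.Random())
    winner, loser = record_vote(tally, pair, "A")  # the voter picked build A
    print(f"You voted for {winner} over {loser}")
    print(win_rates(tally))
```

The key design point is that the voter only ever sees the labels A and B, so the model's reputation cannot influence the choice.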

Rather than digging through code to understand what works, users can simply judge by appearance — making the benchmark accessible to a much broader audience.

The project was started by Adi Singh, an American high school senior. For him, the strength of Minecraft lies in its popularity: everyone knows the game. Even if you’ve never played it, it’s easy to tell whether a build looks like a pineapple or a beach hut.

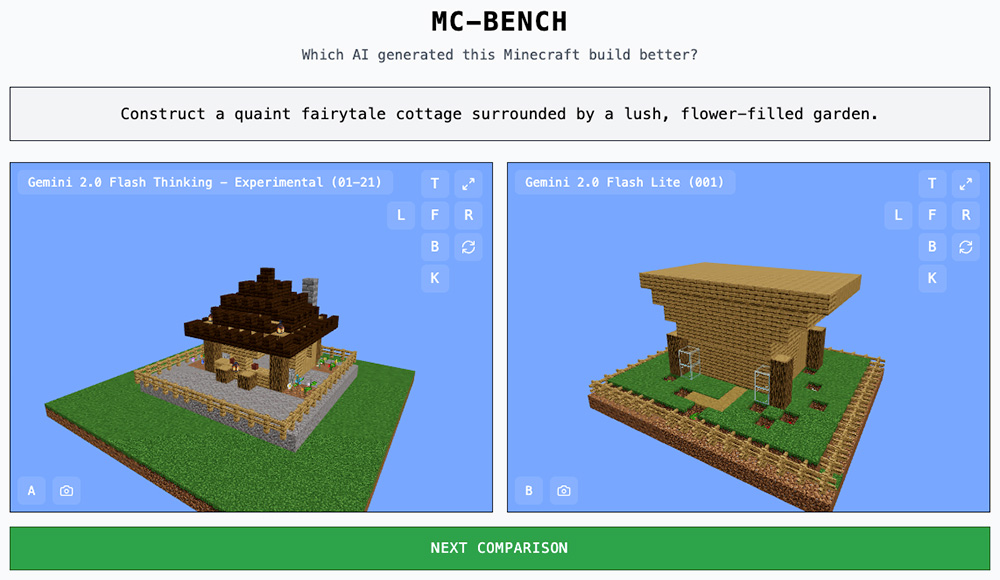

Beyond the entertainment factor, MC-Bench also highlights that AIs that shine in traditional benchmarks don’t necessarily perform as well in other kinds of tasks.

The MC-Bench results — where Claude Sonnet performs particularly well (with versions 3.7 and 3.5 topping GPT-4.5) — provide a more realistic view of what an average user might experience, compared to purely text-based benchmarks.

The project is currently maintained by eight contributors, and companies like Anthropic, Google, OpenAI, and Alibaba have provided support by allowing their models to be used for testing — though they are not officially involved.