Bip bap boop — I shouldn’t have to tell you much if I say that the recent release of the Chinese model DeepSeek R1 has caused massive turbulence in the artificial intelligence field, shaking even well-established giants like Nvidia, OpenAI, and Meta.

Within Zuckerberg’s group, crisis units have reportedly been set up to understand how such an inexpensive model managed to achieve such impressive performance.

DeepSeek R1, which is significantly less resource-intensive than OpenAI’s models (the Chinese startup reportedly uses barely 1% of OpenAI’s equivalent resources for its model), has proven to be more efficient than the latest model from the American AI mega-startup (which says a lot about the monumental thrashing, if you’ll allow the expression).

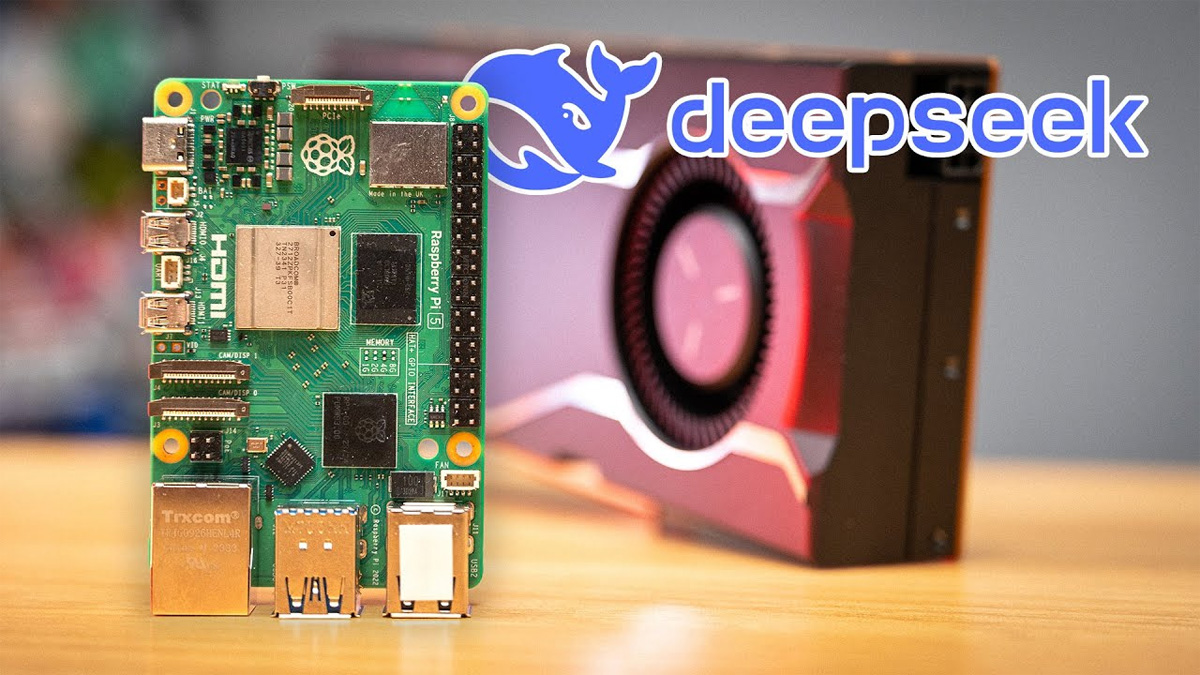

DeepSeek R1 on a Raspberry Pi

The prospect of running an AI model on affordable hardware has naturally caught the attention of the Raspberry Pi developer community, which thrives on technical challenges of all kinds. This community has been striving for some time to make such models run on the simplest and most accessible microcomputer in the world.

As demonstrated by developer Jeff Geerling in his latest YouTube video, the publicly shared model from DeepSeek is technically capable of running on a Raspberry Pi 5.

However, let me temper your enthusiasm right away: don’t expect to enjoy the globally acclaimed performance of this model when running it on this adorable little machine. 😉

To make his project work, Jeff Geerling had to dig into the version history of DeepSeek and deploy the 14b version of the model on his microcomputer—the version currently highlighted by DeepSeek is the 671b (the one supposedly crushing ChatGPT), which weighs no less than 400GB.

Even with this lighter version of the model, his Raspberry Pi managed to generate 1.2 tokens per second. In other words, when you ask it a question, expect to wait about a second for each word to appear. If you’re really in a hurry, well… it might still be worth a try. 👀

As Jeff Geerling points out in the article dedicated to this project on his blog, it is possible to significantly improve performance by integrating an external graphics card, as GPUs and their associated VRAM are much faster than CPUs and system memory.

With the help of an AMD W7700 card (he went a bit overboard with the budget there 😅), the developer managed to reach an average speed of 24 to 54 tokens per second.

But what’s truly impressive is the overall feat of the project. Until now, when it came to running AI on a Raspberry Pi, we were mostly used to sending requests to a remote server rather than running the model directly on the microcomputer itself!

You can learn more by watching Jeff Geerling’s video here:

(Illustration image: Jeff Geerling on YouTube)